Last week I posted a retrospective on swing state polls. However, I excluded Nevada and Arizona because both states still had a significant number of votes left to count. Since then, both states have reached 99% of the vote counted, so I figured I could include them. I also decided to go back a bit further and look at all polls going back to September 23rd, when the first early voting started.

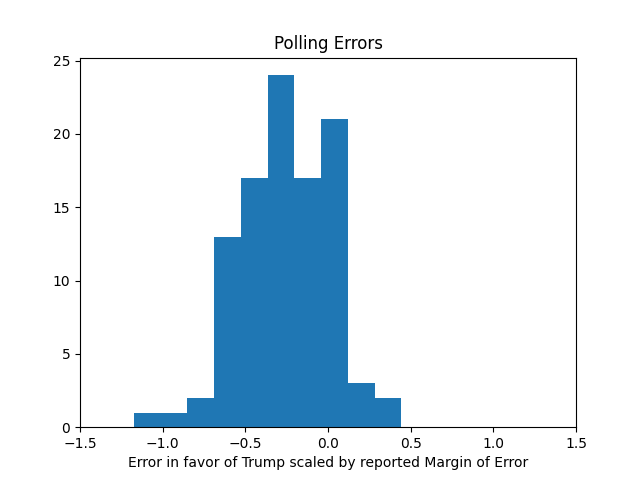

The result? We finally got a poll outside of the margin of error!

This might sound bad, but I would argue it’s at least a step in the right direction. Margins of error are supposed to represent 95% confidence intervals. With 101 polls analyzed, we would expect around 5 of them to fall outside the margin of error, but only 1 did. That suggests there is probably some herding going on. And the polls still show a bias against Trump, which may be due in part to that herding.
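For anyone who wants to check the "around 5" figure, here’s a minimal Python sketch. It treats each of the 101 polls as an independent 5% chance of landing outside its margin of error. That independence is exactly the assumption herding would break. The sketch then asks how likely it would be to see at most one miss if that assumption held.

```python
from math import comb

def prob_at_most(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

n_polls = 101
p_miss = 0.05  # nominal miss rate for a 95% interval, assuming independent polls

expected_misses = n_polls * p_miss
print(f"Expected misses: {expected_misses:.2f}")
print(f"P(at most 1 miss): {prob_at_most(1, n_polls, p_miss):.3f}")
```

Under those assumptions, the chance of one or zero misses comes out to roughly 3.5%. That is why so few misses looks suspicious rather than reassuring.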

The one poll that fell outside the margin of error was a BullFinch Group Michigan poll that had Harris up by 8 percentage points with a margin of error of 4 percentage points. Remember that a margin of error applies to each candidate’s share individually; the margin of error on the gap between the candidates is about twice that. So this poll wasn’t that far off. The pollster also specifically called out the Michigan poll as looking like an outlier, and they pointed out that they deliberately try to avoid herding, so I would argue this is a point in favor of this particular poll.
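The doubling rule can be checked with the standard normal-approximation formula for a proportion. This sketch makes two assumptions: simple random sampling with no weighting or design effects, and a hypothetical sample of 600, which is roughly what a 4-point margin implies. When the two shares add up to 100%, the margin on the lead is exactly twice the margin on one share. With undecided or third-party respondents, it comes out slightly smaller.

```python
from math import sqrt

Z_95 = 1.96  # z-score for a two-sided 95% interval

def moe_share(p, n):
    """95% margin of error on one candidate's share p, from n respondents."""
    return Z_95 * sqrt(p * (1 - p) / n)

def moe_gap(p, q, n):
    """95% margin of error on the lead p - q, with both shares from one sample."""
    # Multinomial variance of (p_hat - q_hat) is (p + q - (p - q)**2) / n
    return Z_95 * sqrt((p + q - (p - q) ** 2) / n)

n = 600  # hypothetical sample size
print(round(100 * moe_share(0.5, n), 1))       # margin on one share, in points
print(round(100 * moe_gap(0.5, 0.5, n), 1))    # margin on the lead, in points
print(round(100 * moe_gap(0.54, 0.46, n), 1))  # an 8-point lead, shares sum to 100%
```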

So does this mean the pollsters did a great job?

No.

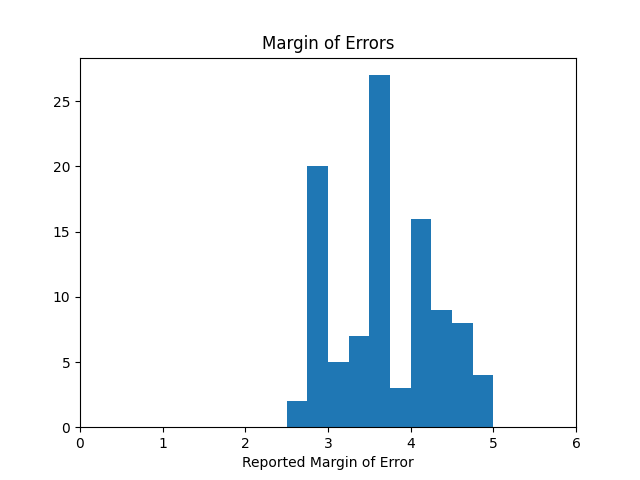

The mean margin of error in these polls was about 3.7 percentage points. The median margin of error was 3.5. That means that for most of these polls, one candidate would need to be up by 7 percentage points in order to say they had a clear lead. Arizona was the biggest blowout of these states, and Trump only won it by 5.5 percentage points. There were only two polls with a margin of error small enough to detect such a lead (2.75 points or less), and neither was in Arizona. They were two Fox News polls in Pennsylvania and Michigan, and both of those races were decided by 2 points or less.
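To put a number on how much bigger these polls would need to be, here’s a rough Python sketch that inverts the margin-of-error formula for the lead. It assumes simple random sampling in an even race, which is the worst case for variance. It also ignores weighting, which in practice pushes the required sample higher. Detecting Arizona’s 5.5-point margin would take a margin of error on the lead of 5.5 points.

```python
from math import ceil, sqrt

Z_95 = 1.96  # z-score for a two-sided 95% interval

def gap_moe(n):
    """95% margin of error on a two-way lead in an even race (p = q = 0.5)."""
    # Var(p_hat - q_hat) = (p + q - (p - q)**2) / n, which is 1 / n when p = q = 0.5
    return Z_95 * sqrt(1 / n)

def sample_size_for_gap_moe(target):
    """Smallest n whose margin on the lead is at most `target` (a proportion)."""
    return ceil((Z_95 / target) ** 2)

n_needed = sample_size_for_gap_moe(0.055)  # Arizona's 5.5-point margin
print(n_needed, round(100 * gap_moe(n_needed), 2))
```

Under these assumptions, this lands at roughly 1,270 respondents. That is about double the roughly 600 that a 4-point margin on each share implies.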

So yes, the pollsters were accurate in that they were within the margin of error. But each and every one of these polls was nowhere near precise enough to be useful. I praised the BullFinch poll for releasing an outlier poll, and I stand by that praise. But it still had a 4-point margin of error. The last time Michigan was decided by more than 8 points in either direction was 2012, when Michigan was not considered a swing state. So I’m not sure what the intended purpose of such a poll was.

And it wasn’t even the worst poll. Several had margins of error approaching 5 points, meaning a candidate would need a nearly double-digit lead for it to fall outside the margin of error.

And again, these were all swing states. If a candidate has a double-digit lead in a state, that state is probably not a swing state.

Look, I know polling is hard and expensive, especially these days, when people generally don’t pick up the phone if they don’t recognize the number. But if your margin of error is this big, I’m not sure how the poll is supposed to be useful. Normally you could argue that aggregating the polls gives a more accurate result. But that assumes the polls are more or less independent, and it’s pretty clear they are not. I suspect the culprit is the models pollsters use to do their weighting, but there are other possibilities too.
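Here’s a rough illustration of why non-independence matters for aggregation. This Python sketch uses a simple equicorrelated model, which is my assumption rather than anything pollsters publish. In that model, every poll’s error on the lead has the same standard deviation `sigma`, and every pair of polls shares the same correlation `rho`. The values below are hypothetical. A sigma of 3.6 points is roughly what a 7-point margin on the lead implies (7 / 1.96).

```python
from math import sqrt

def se_of_average(sigma, n, rho):
    """Standard error of the average of n polls whose errors each have
    standard deviation sigma and pairwise correlation rho."""
    # Var(mean) = (sigma^2 / n) * (1 + (n - 1) * rho)
    return sigma * sqrt((1 + (n - 1) * rho) / n)

sigma = 3.6  # hypothetical per-poll standard error on the lead, in points
for rho in (0.0, 0.3, 0.6):  # hypothetical correlations between poll errors
    for n in (1, 10, 100):
        print(f"rho={rho:.1f} n={n:>3}: {se_of_average(sigma, n, rho):.2f}")
```

With independent polls (rho = 0), the error shrinks like 1/sqrt(n). With any positive correlation, it levels off near sigma * sqrt(rho) no matter how many polls you add.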

So while I will defend pollsters against charges that they were inaccurate, that’s only part of the picture. They were accurate only because their margins of error were so large it was almost impossible for them to be inaccurate.